Cloud Containerization: What It Means for App Scalability

In today’s fast-paced digital landscape, application scalability is no longer a luxury – it’s a necessity. Businesses need to be able to rapidly adapt to fluctuating demands, whether it’s handling a sudden surge in user traffic during a flash sale or scaling down resources during off-peak hours to minimize costs. Traditional application architectures, often monolithic and tightly coupled, struggle to meet these dynamic requirements. This is where cloud containerization steps in, offering a flexible and efficient solution for achieving unparalleled scalability.

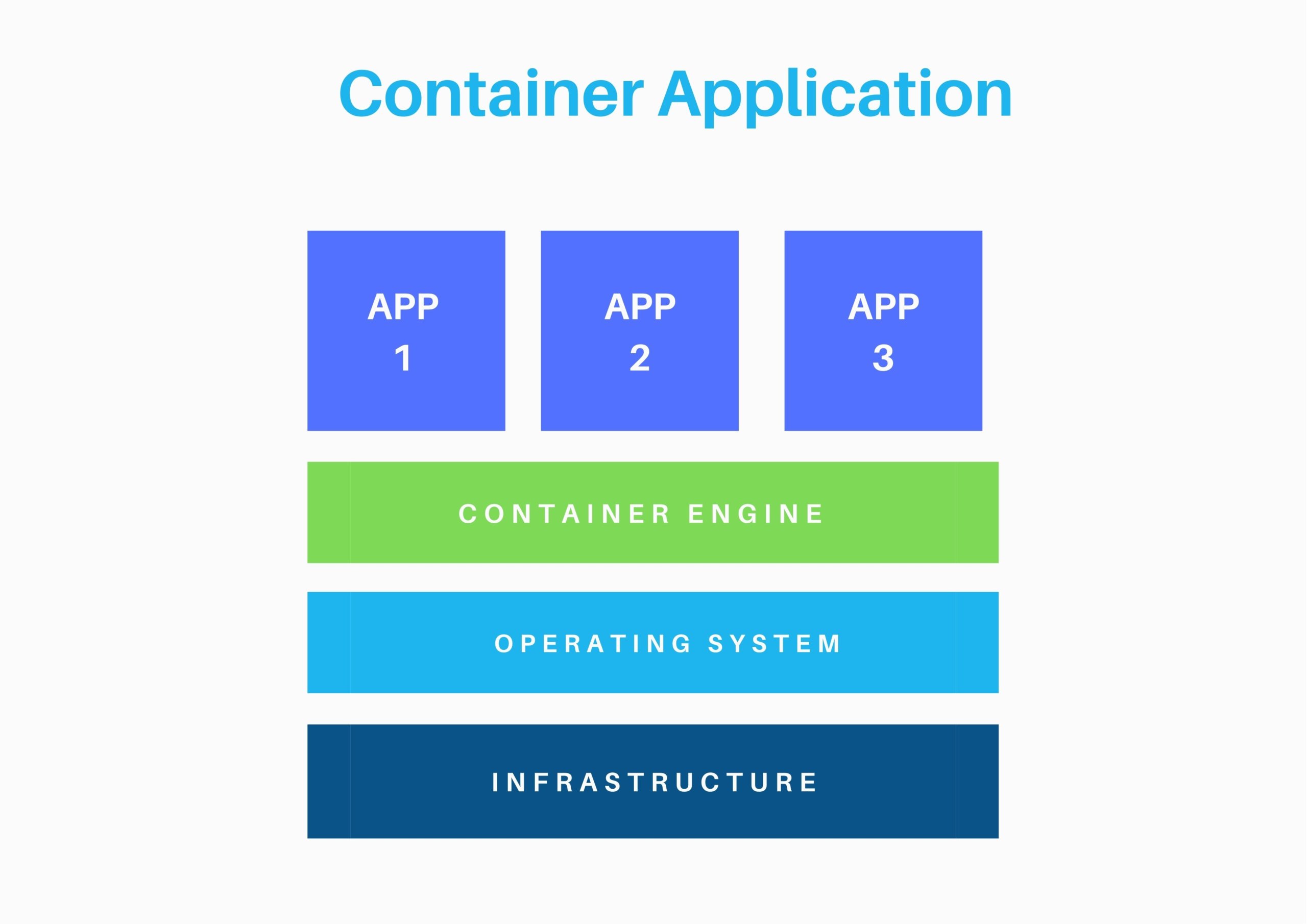

Cloud containerization, at its core, is a technology that packages an application and all its dependencies – libraries, frameworks, configuration files – into a standardized unit called a container. Containers are lightweight and portable. They share the host operating system’s kernel but run isolated from one another and from other processes on the host, so they behave consistently across different environments, from a developer’s laptop to a large-scale cloud infrastructure. This portability and isolation are key to the scalability that modern businesses demand.

This article delves into the world of cloud containerization and explores how it revolutionizes application scalability. We’ll examine the fundamental concepts of containerization, discuss its benefits for scalability, and explore the various cloud platforms and tools that support containerized deployments. By the end of this article, you’ll have a comprehensive understanding of how cloud containerization can empower your organization to build and deploy applications that are highly scalable, resilient, and cost-effective.

Understanding Cloud Containerization

Cloud containerization isn’t just about packaging applications; it’s about fundamentally changing how applications are built, deployed, and managed. It’s a paradigm shift that leverages the power of the cloud to provide unparalleled flexibility and scalability. To truly understand its impact, let’s break down the core concepts.

What are Containers?

Think of a shipping container: a standard box that can be moved between ships, trains, and trucks regardless of what is inside. A software container works the same way. It holds everything an application needs to run – the code, runtime, system tools, system libraries, and settings. Each container is isolated from other containers and from the rest of the host system, so the application runs consistently regardless of the underlying infrastructure. Docker is among the most widely used containerization platforms, providing the tools to build, package, and run containers.

Key Benefits of Containerization

Beyond portability, containerization offers several key benefits:

- Isolation: Containers isolate applications from each other, preventing dependency conflicts and making it far less likely that one application’s failure affects the others.

- Lightweight: Containers are significantly smaller and more lightweight than virtual machines (VMs), as they share the host operating system’s kernel. This allows for faster startup times and higher resource utilization.

- Consistency: Containers ensure that applications run consistently across different environments, from development to testing to production.

- Resource Efficiency: Containers consume fewer resources than VMs, allowing you to run more applications on the same hardware.

Container Orchestration: The Key to Scalability

While individual containers offer many benefits, managing a large number of containers manually can be challenging. This is where container orchestration platforms like Kubernetes come in. Kubernetes automates the deployment, scaling, and management of containerized applications. It allows you to define the desired state of your application – the number of replicas, resource limits, and networking configuration – and Kubernetes will automatically ensure that the application meets those specifications.
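The desired-state idea can be illustrated with a toy reconciliation loop. This is a minimal sketch in Python, not Kubernetes code: `DesiredState`, `reconcile`, and the replica names are hypothetical, and a real orchestrator also handles scheduling, health checks, and rollouts.

```python
from dataclasses import dataclass


@dataclass
class DesiredState:
    """Hypothetical declaration of what the operator wants to be running."""
    app: str
    replicas: int


def reconcile(desired: DesiredState, running: list[str]) -> list[str]:
    """Return the actions needed to move the observed state toward the desired one."""
    actions = []
    diff = desired.replicas - len(running)
    if diff > 0:
        # Too few instances: start the missing ones.
        for i in range(diff):
            actions.append(f"start {desired.app}-{len(running) + i}")
    elif diff < 0:
        # Too many instances: stop the surplus, newest first.
        for name in reversed(running[diff:]):
            actions.append(f"stop {name}")
    return actions


if __name__ == "__main__":
    desired = DesiredState(app="web", replicas=3)
    print(reconcile(desired, ["web-0"]))  # ['start web-1', 'start web-2']
    print(reconcile(desired, ["web-0", "web-1", "web-2", "web-3"]))  # ['stop web-3']
```

The operator only changes `replicas`; the loop works out what to start or stop. Running it repeatedly is safe, because once the observed state matches the declaration it returns no actions.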

How Cloud Containerization Enhances App Scalability

The combination of containerization and cloud computing is a powerful force for application scalability. Let’s examine the specific ways in which cloud containerization enables applications to scale effectively.

Horizontal Scaling Made Easy

Horizontal scaling, also known as scaling out, involves adding more instances of an application to handle increased load. Cloud containerization makes horizontal scaling incredibly easy. With container orchestration platforms like Kubernetes, you can simply increase the number of container replicas, and the platform will automatically deploy and manage the new instances. This allows you to quickly respond to sudden spikes in traffic without impacting application performance.

Dynamic Resource Allocation

Cloud platforms offer dynamic resource allocation, meaning you can adjust the resources allocated to your containers based on their actual needs. In Kubernetes, the Horizontal Pod Autoscaler changes the number of replicas based on metrics like CPU utilization and memory consumption, and the Vertical Pod Autoscaler, an optional add-on, can adjust the CPU and memory requests of individual containers. Together these help your applications get the resources they need to perform well, while minimizing costs by avoiding over-provisioning.

Microservices Architecture and Containerization

Containerization is a perfect fit for microservices architectures. Microservices are small, independent, and loosely coupled services that work together to form a larger application. Each microservice can be packaged as a separate container and deployed independently. This allows you to scale individual microservices based on their specific needs, rather than scaling the entire application. For example, if one microservice is experiencing high traffic, you can scale it independently without affecting the performance of other microservices.
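To make independent scaling concrete, here is a rough sizing sketch in Python. The service names, request rates, and per-replica capacity are invented for illustration, and `replicas_needed` is a hypothetical helper rather than part of any platform.

```python
import math


def replicas_needed(requests_per_second: float, capacity_per_replica: float) -> int:
    """Smallest replica count whose combined capacity covers the load (at least 1)."""
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    return max(1, math.ceil(requests_per_second / capacity_per_replica))


# Hypothetical (load in requests/second, capacity per replica) for three services.
services = {
    "checkout": (900.0, 100.0),
    "catalog": (250.0, 100.0),
    "reviews": (40.0, 100.0),
}

plan = {name: replicas_needed(load, cap) for name, (load, cap) in services.items()}
print(plan)  # {'checkout': 9, 'catalog': 3, 'reviews': 1}
```

Under these assumed numbers only the checkout service runs nine replicas. A monolith bundling all three would need nine copies of everything to absorb the same checkout load.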

Faster Deployment Cycles

Cloud containerization enables faster deployment cycles. Because containers are portable and isolated, you can deploy new versions of your application more quickly and reliably. Continuous integration and continuous delivery (CI/CD) pipelines can be easily integrated with containerization platforms, automating the process of building, testing, and deploying containerized applications. This allows you to release new features and bug fixes more frequently, improving the overall agility of your organization.

Improved Fault Tolerance and Resilience

Container orchestration platforms like Kubernetes provide built-in fault tolerance and resilience. If a container fails, Kubernetes will automatically restart it. If a node in the cluster fails, Kubernetes will automatically reschedule the containers running on that node to other healthy nodes. This ensures that your application remains available even in the face of hardware failures or other unexpected events.
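The rescheduling behaviour can be sketched with a toy placement function. This is a simplified illustration in Python, not the Kubernetes scheduler: `reschedule`, the node names, and the single per-node `capacity` limit are assumptions, and real schedulers weigh CPU, memory, affinity rules, and more.

```python
def reschedule(placement: dict[str, list[str]], failed_node: str,
               capacity: int) -> tuple[dict[str, list[str]], list[str]]:
    """Move workloads off a failed node onto the least-loaded healthy nodes.

    `placement` maps node name -> workload names; `capacity` is a hypothetical
    per-node limit. Returns the new placement and the workloads that fit nowhere.
    """
    healthy = {node: list(pods) for node, pods in placement.items()
               if node != failed_node}
    pending = []
    for pod in placement.get(failed_node, []):
        candidates = [n for n in healthy if len(healthy[n]) < capacity]
        if not candidates:
            pending.append(pod)  # No room anywhere: wait for more capacity.
            continue
        # Pick the node with the fewest workloads; ties break by name.
        target = min(candidates, key=lambda n: (len(healthy[n]), n))
        healthy[target].append(pod)
    return healthy, pending


if __name__ == "__main__":
    cluster = {"node-a": ["web-0", "web-1"], "node-b": ["web-2"], "node-c": ["api-0"]}
    print(reschedule(cluster, failed_node="node-a", capacity=3))
```

In this example both workloads from `node-a` land on the two remaining nodes. If the surviving nodes were already full, the workloads would stay pending, which is why spare capacity (or node auto-scaling) matters for resilience.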

Cloud Platforms and Containerization

Several cloud platforms offer robust support for containerization, providing the infrastructure and tools needed to build, deploy, and manage containerized applications. Let’s explore some of the most popular options.

Amazon Web Services (AWS)

AWS offers several containerization services, including:

- Amazon Elastic Container Service (ECS): A fully managed container orchestration service that makes it easy to run, scale, and manage Docker containers on AWS.

- Amazon Elastic Kubernetes Service (EKS): A managed Kubernetes service that allows you to run Kubernetes on AWS without operating the Kubernetes control plane yourself.

- AWS Fargate: A serverless compute engine for containers that allows you to run containers without managing servers or clusters.

Microsoft Azure

Azure provides the following containerization services:

- Azure Kubernetes Service (AKS): A managed Kubernetes service that simplifies the deployment, management, and scaling of Kubernetes clusters in Azure.

- Azure Container Instances (ACI): A serverless container offering that allows you to run containers on Azure without managing VMs or clusters.

- Azure Container Apps: A fully managed serverless container execution environment that enables you to build and deploy modern applications at scale.

Google Cloud Platform (GCP)

GCP offers the following containerization services:

- Google Kubernetes Engine (GKE): A managed Kubernetes service that provides a production-ready environment for deploying, managing, and scaling containerized applications on GCP.

- Cloud Run: A fully managed serverless platform that allows you to run containers without managing infrastructure.

Best Practices for Scaling Containerized Applications

While cloud containerization provides the foundation for application scalability, it’s important to follow best practices to ensure that your applications are truly scalable and resilient.

Monitor and Optimize Resource Utilization

Continuously monitor the resource utilization of your containers and optimize resource allocation accordingly. Use monitoring tools to track CPU utilization, memory consumption, and network traffic. Adjust resource limits and requests to ensure that your containers have the resources they need without being over-provisioned.
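One simple way to act on utilization data is to compare peak usage with what each container has requested. The sketch below is illustrative Python, not the output of any monitoring tool: `classify_usage` is a hypothetical helper, the 50% and 90% thresholds are arbitrary defaults, and the samples are invented CPU readings in millicores.

```python
def classify_usage(usage_samples: list[float], request: float,
                   low: float = 0.5, high: float = 0.9) -> str:
    """Compare peak observed usage with the requested amount.

    Returns "over-provisioned" when the peak stays below `low` * request,
    "under-provisioned" when it exceeds `high` * request, otherwise "ok".
    """
    if not usage_samples:
        raise ValueError("need at least one usage sample")
    if request <= 0:
        raise ValueError("request must be positive")
    peak_ratio = max(usage_samples) / request
    if peak_ratio < low:
        return "over-provisioned"
    if peak_ratio > high:
        return "under-provisioned"
    return "ok"


print(classify_usage([120, 150, 180], request=500))  # over-provisioned
print(classify_usage([400, 470, 480], request=500))  # under-provisioned
print(classify_usage([300, 350], request=500))       # ok
```

Using the peak rather than the average is a deliberately cautious choice here; a percentile over a longer window is a common alternative when occasional spikes are acceptable.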

Implement Auto-Scaling

Leverage the auto-scaling capabilities of your container orchestration platform to automatically scale your application based on real-time demand. Configure auto-scaling rules based on metrics like CPU utilization, memory consumption, and request latency.
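The Kubernetes Horizontal Pod Autoscaler documentation describes its core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that formula in Python with a tolerance band and replica bounds. The function name and the default bounds are assumptions for illustration, and the real controller also handles stabilization windows, missing metrics, and pod readiness.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Apply the proportional scaling rule, clamped to [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(4, current_metric=90, target_metric=60))   # 6
print(desired_replicas(4, current_metric=30, target_metric=60))   # 2
print(desired_replicas(4, current_metric=63, target_metric=60))   # 4
print(desired_replicas(8, current_metric=200, target_metric=50))  # 10
```

The tolerance band keeps the replica count from changing on small metric fluctuations, and the upper bound caps cost when a metric spikes far above its target.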

Design for Fault Tolerance

Design your application to be fault-tolerant. Implement retry mechanisms, circuit breakers, and other patterns to handle failures gracefully. Use health checks to monitor the health of your containers and automatically restart unhealthy containers. Put a load balancer in front of your replicas so that traffic is distributed across healthy instances.
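The retry and circuit breaker patterns mentioned above can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions (single-threaded use, every exception treated as retryable); `retry` and `CircuitBreaker` are hypothetical names, and production code would more likely use an established resilience library or a service mesh.

```python
import time


def retry(operation, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call `operation`, retrying on any exception with exponential backoff."""
    for attempt in range(attempts):
        try:
            return operation()
        except Exception:
            if attempt == attempts - 1:
                raise  # Out of attempts: surface the last error.
            sleep(base_delay * (2 ** attempt))


class CircuitBreaker:
    """Stop calling a failing dependency after `threshold` consecutive failures."""

    def __init__(self, threshold=3, reset_after=30.0, clock=time.monotonic):
        self.threshold = threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # Time the circuit opened, or None when closed.

    def call(self, operation):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise RuntimeError("circuit open: call skipped")
            # Cool-down elapsed: let one trial call through (half-open).
            self.opened_at = None
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # Any success closes the circuit fully.
        return result
```

Retries absorb brief, transient faults, while the breaker protects a struggling dependency from being hammered by callers that keep retrying. The `sleep` and `clock` parameters exist only so the timing can be replaced in tests.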

Optimize Container Images

Optimize your container images to reduce their size and improve startup times. Use multi-stage builds to create smaller images. Remove unnecessary dependencies and files from your images.

Use a Service Mesh

Consider using a service mesh like Istio or Linkerd to manage the complexity of microservices deployments. Service meshes provide features like traffic management, security, and observability.

Conclusion

Cloud containerization is a game-changer for application scalability. By packaging applications and their dependencies into portable, isolated containers, businesses can achieve unparalleled flexibility, efficiency, and resilience. The ability to horizontally scale, dynamically allocate resources, and deploy microservices with ease makes cloud containerization a critical component of modern application architectures. As businesses increasingly rely on cloud-native technologies, understanding and embracing cloud containerization is essential for success in today’s dynamic digital landscape.

Choosing the right cloud platform and container orchestration tools is crucial, but equally important is adopting best practices for monitoring, optimization, and fault tolerance. By following these guidelines, organizations can unlock the full potential of cloud containerization and build applications that are truly scalable, resilient, and cost-effective.

The future of application development and deployment is undoubtedly containerized. Embracing this technology and investing in the skills and tools needed to manage containerized environments will position your organization for long-term success in the cloud.

Frequently Asked Questions (FAQ) about Cloud Containerization: What It Means for App Scalability

How does using cloud containerization improve the scalability of my applications?

Cloud containerization significantly enhances application scalability through several key mechanisms. Firstly, containers package an application and its dependencies together, ensuring consistent performance across different environments. This isolation means you can easily deploy multiple instances of your application without conflicts. Secondly, cloud platforms like AWS, Azure, and Google Cloud offer robust orchestration tools (e.g., Kubernetes) that automate the scaling process. These tools can automatically increase or decrease the number of container instances based on real-time demand, optimizing resource utilization and ensuring your application can handle traffic spikes. Finally, the lightweight nature of containers allows for faster startup times compared to virtual machines, enabling rapid scaling when needed.

What are the key differences between scaling applications with containers vs. traditional virtual machines in the cloud, particularly regarding resource utilization and speed?

Scaling applications with containers offers significant advantages over traditional virtual machines (VMs) in terms of resource utilization and speed. VMs require a full operating system for each instance, consuming considerable CPU, memory, and storage resources even when idle. Containers, on the other hand, share the host operating system kernel, resulting in a much smaller footprint. This means you can run more container instances on the same hardware compared to VMs, leading to better resource utilization and reduced infrastructure costs. Furthermore, containers have significantly faster startup times (often measured in seconds) compared to VMs (which can take minutes), enabling quicker scaling responses to fluctuating demand. This speed is crucial for maintaining application performance during peak loads.

What are some best practices for implementing cloud containerization to ensure optimal application scalability and avoid common pitfalls related to resource management and security?

To ensure optimal application scalability with cloud containerization, several best practices should be followed. Firstly, implement robust resource management by setting resource limits (CPU, memory) for each container to prevent resource contention and ensure fair allocation. Secondly, adopt a microservices architecture to break down large applications into smaller, independently scalable services. Thirdly, use container orchestration tools like Kubernetes to automate deployment, scaling, and management of containers. Fourthly, prioritize security by regularly scanning container images for vulnerabilities and implementing network policies to restrict communication between containers. Finally, monitor your containerized applications closely using tools that provide insights into resource utilization, performance, and errors. This proactive approach helps identify and address potential scalability bottlenecks and security risks before they impact your application’s performance.